JBoss.org Community Documentation

Abstract

The Narayana Project Documentation contains information on how to use Narayana to develop applications that use transaction technology to manage business processes.

- Preface

- 1. Narayana Core

- 1.1. Overview

- 1.2. Using ArjunaCore

- 1.3. Advanced transaction issues with ArjunaCore

- 1.3.1. Last resource commit optimization (LRCO)

- 1.3.2. Heuristic outcomes

- 1.3.3. Nested transactions

- 1.3.4. Asynchronously committing a transaction

- 1.3.5. Independent top-level transactions

- 1.3.6. Transactions within save_state and restore_state methods

- 1.3.7. Garbage collecting objects

- 1.3.8. Transaction timeouts

- 1.4. Hints and tips

- 1.5. Constructing a Transactional Objects for Java application

- 1.6. Failure Recovery

- 2. JTA

- 3. JTS

- 3.1. Administration

- 3.2. Development

- 3.3. ORB Portability

- 3.4. Quick Start to JTS/OTS

- 3.4.1. Introduction

- 3.4.2. Package layout

- 3.4.3. Setting properties

- 3.4.4. Starting and terminating the ORB and BOA/POA

- 3.4.5. Specifying the object store location

- 3.4.6. Implicit transaction propagation and interposition

- 3.4.7. Obtaining Current

- 3.4.8. Transaction termination

- 3.4.9. Transaction factory

- 3.4.10. Recovery manager

- 4. XTS

- 5. Long Running Actions (LRA)

- 6. RTS

- 7. STM

- 8. Compensating transactions

- 9. OSGi

- 10. Appendixes

This manual uses several conventions to highlight certain words and phrases and draw attention to specific pieces of information.

In PDF and paper editions, this manual uses typefaces drawn from the Liberation Fonts set. The Liberation Fonts set is also used in HTML editions if the set is installed on your system. If not, alternative but equivalent typefaces are displayed. Note: Red Hat Enterprise Linux 5 and later includes the Liberation Fonts set by default.

Four typographic conventions are used to call attention to specific words and phrases. These conventions, and the circumstances they apply to, are as follows.

Mono-spaced Bold

Used to highlight system input, including shell commands, file names and paths. Also used to highlight keycaps and key combinations. For example:

To see the contents of the file my_next_bestselling_novel in your current working directory, enter the cat my_next_bestselling_novel command at the shell prompt and press Enter to execute the command.

The above includes a file name, a shell command and a keycap, all presented in mono-spaced bold and all distinguishable thanks to context.

Key combinations can be distinguished from keycaps by the hyphen connecting each part of a key combination. For example:

Press Enter to execute the command.

Press Ctrl+Alt+F2 to switch to the first virtual terminal. Press Ctrl+Alt+F1 to return to your X-Windows session.

The first paragraph highlights the particular keycap to press. The second highlights two key combinations (each a set of three keycaps with each set pressed simultaneously).

If source code is discussed, class names, methods, functions, variable names and returned values mentioned within a paragraph will be presented as above, in mono-spaced bold. For example:

File-related classes include filesystem for file systems, file for files, and dir for directories. Each class has its own associated set of permissions.

Proportional Bold

This denotes words or phrases encountered on a system, including application names; dialog box text; labeled buttons; check-box and radio button labels; menu titles and sub-menu titles. For example:

Choose → → from the main menu bar to launch Mouse Preferences. In the Buttons tab, click the Left-handed mouse check box and click to switch the primary mouse button from the left to the right (making the mouse suitable for use in the left hand).

To insert a special character into a gedit file, choose → → from the main menu bar. Next, choose → from the Character Map menu bar, type the name of the character in the Search field and click . The character you sought will be highlighted in the Character Table. Double-click this highlighted character to place it in the Text to copy field and then click the button. Now switch back to your document and choose → from the gedit menu bar.

The above text includes application names; system-wide menu names and items; application-specific menu names; and buttons and text found within a GUI interface, all presented in proportional bold and all distinguishable by context.

Mono-spaced Bold Italic or Proportional Bold Italic

Whether mono-spaced bold or proportional bold, the addition of italics indicates replaceable or variable text. Italics denotes text you do not input literally or displayed text that changes depending on circumstance. For example:

To connect to a remote machine using ssh, type ssh username@domain.name at a shell prompt. If the remote machine is example.com and your username on that machine is john, type ssh john@example.com.

The mount -o remount file-system command remounts the named file system. For example, to remount the /home file system, the command is mount -o remount /home.

To see the version of a currently installed package, use the rpm -q package command. It will return a result as follows: package-version-release.

Note the words in bold italics above — username, domain.name, file-system, package, version and release. Each word is a placeholder, either for text you enter when issuing a command or for text displayed by the system.

Aside from standard usage for presenting the title of a work, italics denotes the first use of a new and important term. For example:

Publican is a DocBook publishing system.

Terminal output and source code listings are set off visually from the surrounding text.

Output sent to a terminal is set in mono-spaced roman and presented thus:

books Desktop documentation drafts mss photos stuff svn books_tests Desktop1 downloads images notes scripts svgs

Source-code listings are also set in mono-spaced roman but add syntax highlighting as follows:

package org.jboss.book.jca.ex1;

import javax.naming.InitialContext;

public class ExClient

{

public static void main(String args[])

throws Exception

{

InitialContext iniCtx = new InitialContext();

Object ref = iniCtx.lookup("EchoBean");

EchoHome home = (EchoHome) ref;

Echo echo = home.create();

System.out.println("Created Echo");

System.out.println("Echo.echo('Hello') = " + echo.echo("Hello"));

}

}

Finally, we use three visual styles to draw attention to information that might otherwise be overlooked.

Note

Notes are tips, shortcuts or alternative approaches to the task at hand. Ignoring a note should have no negative consequences, but you might miss out on a trick that makes your life easier.

Important

Important boxes detail things that are easily missed: configuration changes that only apply to the current session, or services that need restarting before an update will apply. Ignoring a box labeled 'Important' will not cause data loss but may cause irritation and frustration.

Warning

Warnings should not be ignored. Ignoring warnings will most likely cause data loss.

Please feel free to raise any issues you find with this document in our issue tracking system


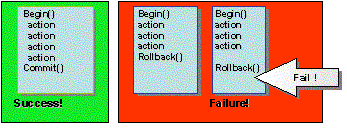

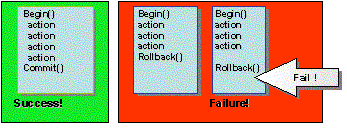

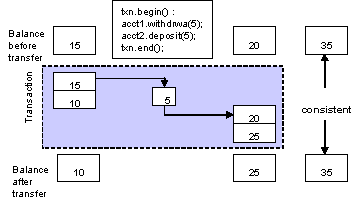

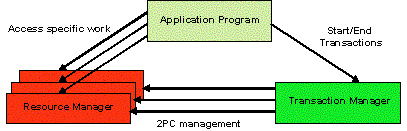

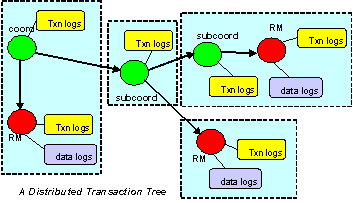

A transaction is a unit of work that encapsulates multiple database actions such that either all the encapsulated actions fail or all succeed.

Transactions ensure data integrity when an application interacts with multiple datasources.

This chapter contains a description of the use of the ArjunaCore transaction engine and the Transactional Objects for Java (TXOJ) classes and facilities. The classes mentioned in this chapter are the key to writing fault-tolerant applications using transactions. Thus, they are described and then applied in the construction of a simple application. The classes to be described in this chapter can be found in the com.arjuna.ats.txoj and com.arjuna.ats.arjuna packages.

Stand-Alone Transaction Manager

Although Narayana can be embedded in various containers, such as WildFly Application Server, it remains a stand-alone transaction manager as well. There are no dependencies between the core Narayana and any container implementations.

In keeping with the object-oriented view, the mechanisms needed to construct reliable distributed applications are presented to programmers in an object-oriented manner. Some mechanisms need to be inherited, for example, concurrency control and state management. Other mechanisms, such as object storage and transactions, are implemented as ArjunaCore objects that are created and manipulated like any other object.

Note

When the manual talks about using persistence and concurrency control facilities it assumes that the Transactional Objects for Java (TXOJ) classes are being used. If this is not the case then the programmer is responsible for all of these issues.

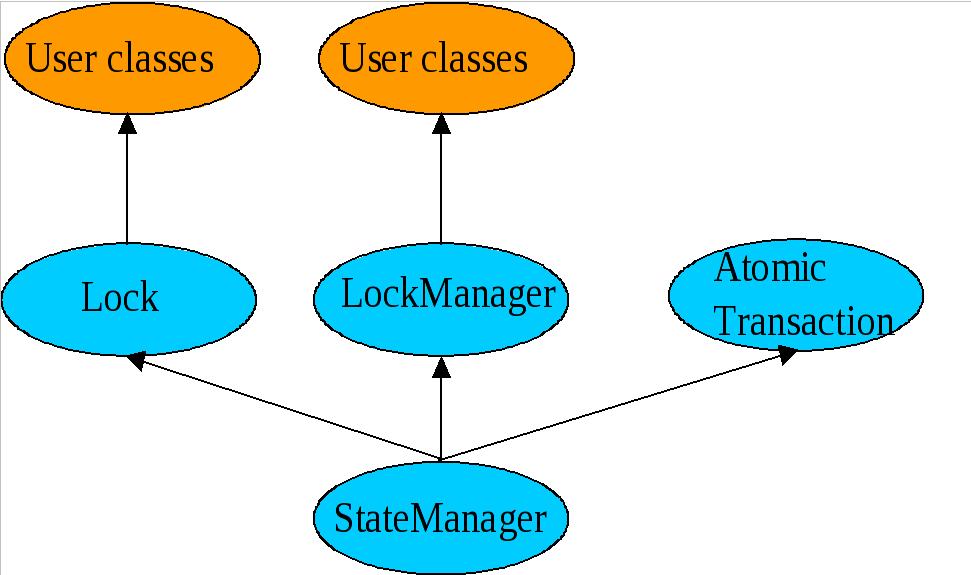

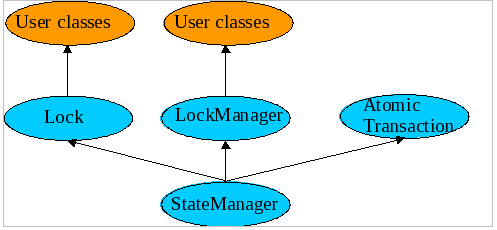

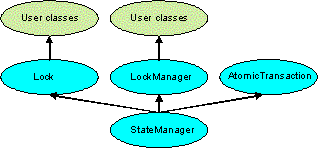

ArjunaCore exploits object-oriented techniques to present programmers with a toolkit of Java classes from which application classes can inherit to obtain desired properties, such as persistence and concurrency control. These classes form a hierarchy, part of which is shown in Figure 1.1, “ArjunaCore Class Hierarchy” and which will be described later in this document.

Apart from specifying the scopes of transactions, and setting appropriate locks within objects, the application programmer does not have any other responsibilities: ArjunaCore and TXOJ guarantee that transactional objects will be registered with, and be driven by, the appropriate transactions, and crash recovery mechanisms are invoked automatically in the event of failures.

ArjunaCore needs to be able to remember the state of an object for several purposes.

- recovery: The state represents some past state of the object.

- persistence: The state represents the final state of an object at application termination.

Since these requirements have common functionality they are all implemented using the same mechanism: the classes InputObjectState and OutputObjectState. The classes maintain an internal array into which instances of the standard types can be contiguously packed or unpacked using appropriate pack or unpack operations. This buffer is automatically resized as required should it have insufficient space. The instances are all stored in the buffer in a standard form called network byte order, making them machine independent. Any other architecture-independent format, such as XDR or ASN.1, can be implemented simply by replacing the operations with ones appropriate to the encoding required.

Implementations of persistence can be affected by restrictions imposed by the Java SecurityManager. Therefore, the object store provided with ArjunaCore is implemented using the techniques of interface and implementation. The current distribution includes implementations which write object states to the local file system or database, and remote implementations, where the interface uses a client stub (proxy) to remote services.

Persistent objects are assigned unique identifiers, which are instances of the Uid class, when they are created. These identifiers are used to identify them within the object store. States are read using the read_committed operation and written by the write_committed and write_uncommitted operations.

At the root of the class hierarchy is the class StateManager. StateManager is responsible for object activation and deactivation, as well as object recovery. Refer to Example 1.1, “StateManager” for the simplified signature of the class.

Example 1.1. StateManager

public abstract class StateManager

{

public boolean activate ();

public boolean deactivate (boolean commit);

public Uid get_uid (); // object’s identifier.

// methods to be provided by a derived class

public boolean restore_state (InputObjectState os);

public boolean save_state (OutputObjectState os);

protected StateManager ();

protected StateManager (Uid id);

};

Objects are assumed to be of three possible flavors.

Three Flavors of Objects

- Recoverable: StateManager attempts to generate and maintain appropriate recovery information for the object. Such objects have lifetimes that do not exceed the application program that creates them.

- Recoverable and Persistent: The lifetime of the object is assumed to be greater than that of the creating or accessing application, so that in addition to maintaining recovery information, StateManager attempts to automatically load or unload any existing persistent state for the object by calling the activate or deactivate operation at appropriate times.

- Neither Recoverable nor Persistent: No recovery information is ever kept, nor is object activation or deactivation ever automatically attempted.

If an object is recoverable or recoverable and persistent, then StateManager invokes the operations save_state (while performing deactivate) and restore_state (while performing activate) at various points during the execution of the application. These operations must be implemented by the programmer since StateManager cannot detect user-level state changes. This gives the programmer the ability to decide which parts of an object’s state should be made persistent. For example, for a spreadsheet it may not be necessary to save all entries if some values can simply be recomputed. The save_state implementation for a class Example that has integer member variables called A, B and C might be implemented as in Example 1.2, “save_state Implementation”.

Example 1.2. save_state Implementation

public boolean save_state(OutputObjectState o)

{

if (!super.save_state(o))

return false;

try

{

o.packInt(A);

o.packInt(B);

o.packInt(C);

}

catch (Exception e)

{

return false;

}

return true;

}

Note

It is necessary for all save_state and restore_state methods to call super.save_state and super.restore_state. This is to cater for improvements in the crash recovery mechanisms.
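A restore_state implementation has to unpack values in exactly the order in which save_state packed them, after first delegating to the superclass. The following is a minimal sketch of that symmetry. It deliberately does not use the ArjunaCore classes: DemoOutputState, DemoInputState and Base are hypothetical stand-ins built on java.io streams, so the example runs without the Narayana libraries on the classpath.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

public class SaveRestoreDemo {
    // Hypothetical stand-in for OutputObjectState: packs ints into a byte array.
    static class DemoOutputState {
        private final ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        private final DataOutputStream out = new DataOutputStream(bytes);

        void packInt(int i) throws IOException { out.writeInt(i); }
        byte[] buffer() { return bytes.toByteArray(); }
    }

    // Hypothetical stand-in for InputObjectState: unpacks ints in packing order.
    static class DemoInputState {
        private final DataInputStream in;

        DemoInputState(byte[] b) { in = new DataInputStream(new ByteArrayInputStream(b)); }
        int unpackInt() throws IOException { return in.readInt(); }
    }

    // Stand-in for the superclass, which saves its own state first.
    static class Base {
        private int version = 1;

        public boolean save_state(DemoOutputState o) {
            try { o.packInt(version); return true; } catch (IOException e) { return false; }
        }

        public boolean restore_state(DemoInputState i) {
            try { version = i.unpackInt(); return true; } catch (IOException e) { return false; }
        }
    }

    static class Example extends Base {
        int A, B, C;

        @Override
        public boolean save_state(DemoOutputState o) {
            if (!super.save_state(o)) return false; // superclass state goes first
            try {
                o.packInt(A);
                o.packInt(B);
                o.packInt(C);
            } catch (IOException e) {
                return false;
            }
            return true;
        }

        @Override
        public boolean restore_state(DemoInputState i) {
            if (!super.restore_state(i)) return false; // same order as save_state
            try {
                A = i.unpackInt();
                B = i.unpackInt();
                C = i.unpackInt();
            } catch (IOException e) {
                return false;
            }
            return true;
        }
    }

    public static void main(String[] args) {
        Example original = new Example();
        original.A = 1;
        original.B = 2;
        original.C = 3;

        DemoOutputState out = new DemoOutputState();
        original.save_state(out);

        Example copy = new Example();
        copy.restore_state(new DemoInputState(out.buffer()));
        System.out.println(copy.A + " " + copy.B + " " + copy.C); // prints "1 2 3"
    }
}
```

If the two methods disagree on order, or one of them skips the call to the superclass, the values are silently read into the wrong fields, which is why the pairing is worth keeping visually parallel.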

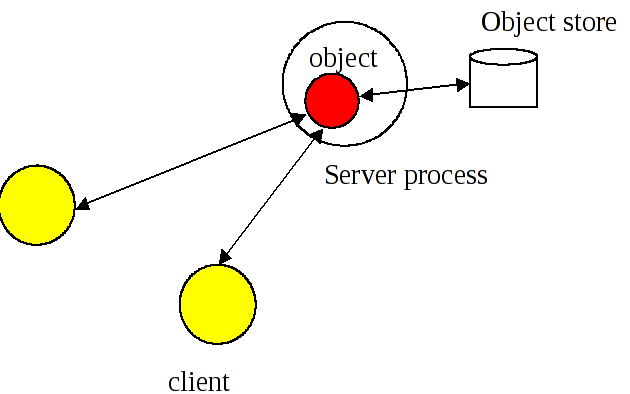

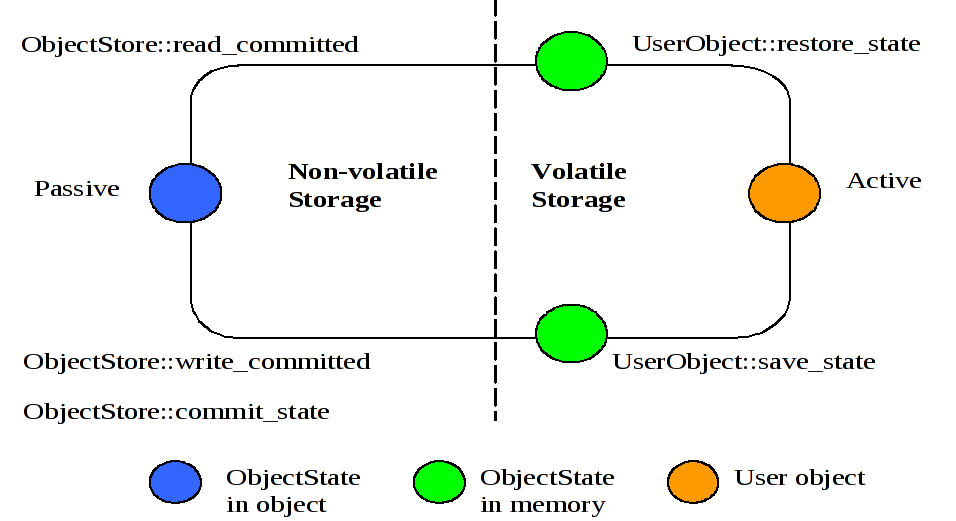

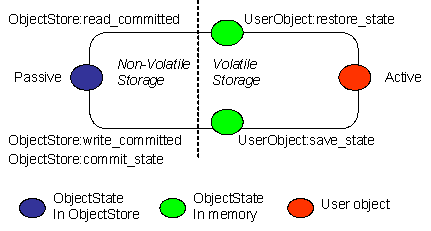

A persistent object not in use is assumed to be held in a passive state, with its state residing in an object store and activated on demand. The fundamental life cycle of a persistent object in TXOJ is shown in Figure 1.2, “Life cycle of a persistent Object in TXOJ”.

Figure 1.2. Life cycle of a persistent Object in TXOJ

- The object is initially passive, and is stored in the object store as an instance of the class OutputObjectState.

- When required by an application, the object is automatically activated by reading it from the store using a read_committed operation and is then converted from an InputObjectState instance into a fully-fledged object by the restore_state operation of the object.

- When the application has finished with the object, it is deactivated by converting it back into an OutputObjectState instance using the save_state operation, and is then stored back into the object store as a shadow copy using write_uncommitted. This shadow copy can be committed, overwriting the previous version, using the commit_state operation. The existence of shadow copies is normally hidden from the programmer by the transaction system. Object deactivation normally only occurs when the top-level transaction within which the object was activated commits.

Note

During its lifetime, a persistent object may be made active then passive many times.

The concurrency controller is implemented by the class LockManager, which provides sensible default behavior while allowing the programmer to override it if deemed necessary by the particular semantics of the class being programmed. As with StateManager and persistence, concurrency control implementations are accessed through interfaces. As well as providing access to remote services, the current implementations of concurrency control available to interfaces include:

- Local disk/database implementation: Locks are made persistent by being written to the local file system or database.

- A purely local implementation: Locks are maintained within the memory of the virtual machine which created them. This implementation has better performance than when writing locks to the local disk, but objects cannot be shared between virtual machines. Importantly, it is a basic Java object with no requirements which can be affected by the SecurityManager.

The primary programmer interface to the concurrency controller is via the setlock operation. By default, the runtime system enforces strict two-phase locking following a multiple reader, single writer policy on a per-object basis. However, as shown in Figure 1.1, “ArjunaCore Class Hierarchy”, by inheriting from the Lock class, you can provide your own lock implementations with different lock conflict rules to enable type-specific concurrency control.

Lock acquisition is, of necessity, under programmer control, since just as StateManager cannot determine if an operation modifies an object, LockManager cannot determine if an operation requires a read or write lock. Lock release, however, is under control of the system and requires no further intervention by the programmer. This ensures that the two-phase property can be correctly maintained.
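The default policy can be pictured as a small compatibility check. The sketch below is an illustration only, not the ArjunaCore Lock or LockManager implementation: the class LockPolicyDemo, its Mode enum and the releaseAll method are hypothetical names chosen for this example. It shows the multiple reader, single writer rule and the fact that locks are released together when the owning transaction ends.

```java
import java.util.ArrayList;
import java.util.List;

public class LockPolicyDemo {
    enum Mode { READ, WRITE }

    // A held lock: who owns it and in which mode.
    static final class Held {
        final String owner;
        final Mode mode;

        Held(String owner, Mode mode) {
            this.owner = owner;
            this.mode = mode;
        }
    }

    private final List<Held> held = new ArrayList<>();

    // Multiple reader, single writer: locks of different owners conflict
    // unless both are READ locks.
    static boolean conflicts(Mode requested, Mode existing) {
        return requested == Mode.WRITE || existing == Mode.WRITE;
    }

    // Grant the lock only if it is compatible with every lock held by others.
    boolean setlock(String owner, Mode mode) {
        for (Held h : held) {
            if (!h.owner.equals(owner) && conflicts(mode, h.mode)) {
                return false; // refused
            }
        }
        held.add(new Held(owner, mode));
        return true; // granted
    }

    // Strict two-phase locking: all locks of a transaction are released
    // together when it terminates, never one by one by the programmer.
    void releaseAll(String owner) {
        held.removeIf(h -> h.owner.equals(owner));
    }

    public static void main(String[] args) {
        LockPolicyDemo object = new LockPolicyDemo();
        System.out.println(object.setlock("T1", Mode.READ));  // true
        System.out.println(object.setlock("T2", Mode.READ));  // true: readers share
        System.out.println(object.setlock("T2", Mode.WRITE)); // false: T1 still reads
        object.releaseAll("T1");                              // T1 terminates
        System.out.println(object.setlock("T2", Mode.WRITE)); // true
    }
}
```

A type-specific policy would replace only the conflicts rule, which mirrors the idea of deriving from Lock to supply different conflict rules.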

public class LockResult

{

public static final int GRANTED;

public static final int REFUSED;

public static final int RELEASED;

};

public class ConflictType

{

public static final int CONFLICT;

public static final int COMPATIBLE;

public static final int PRESENT;

};

public abstract class LockManager extends StateManager

{

public static final int defaultRetry;

public static final int defaultTimeout;

public static final int waitTotalTimeout;

public final synchronized boolean releaselock (Uid lockUid);

public final synchronized int setlock (Lock toSet);

public final synchronized int setlock (Lock toSet, int retry);

public final synchronized int setlock (Lock toSet, int retry, int sleepTime);

public void print (PrintStream strm);

public String type ();

public boolean save_state (OutputObjectState os, int ObjectType);

public boolean restore_state (InputObjectState os, int ObjectType);

protected LockManager ();

protected LockManager (int ot);

protected LockManager (int ot, int objectModel);

protected LockManager (Uid storeUid);

protected LockManager (Uid storeUid, int ot);

protected LockManager (Uid storeUid, int ot, int objectModel);

protected void terminate ();

};

The LockManager class is primarily responsible for managing requests to set a lock on an object or to release a lock as appropriate. However, since it is derived from StateManager, it can also control when some of the inherited facilities are invoked. For example, LockManager assumes that the setting of a write lock implies that the invoking operation must be about to modify the object. This may in turn cause recovery information to be saved if the object is recoverable. In a similar fashion, successful lock acquisition causes activate to be invoked.

Example 1.3, “Example Class” shows how to try to obtain a write lock on an object.

Example 1.3. Example Class

public class Example extends LockManager

{

public boolean foobar ()

{

AtomicAction A = new AtomicAction();

boolean result = false;

A.begin();

if (setlock(new Lock(LockMode.WRITE), 0) == LockResult.GRANTED)

{

/*

* Do some work, and TXOJ will

* guarantee ACID properties.

*/

// automatically aborts if fails

if (A.commit() == AtomicAction.COMMITTED)

{

result = true;

}

}

else

A.rollback();

return result;

}

}

The transaction protocol engine is represented by the AtomicAction class, which uses StateManager to record sufficient information for crash recovery mechanisms to complete the transaction in the event of failures. It has methods for starting and terminating the transaction, and, for those situations where programmers need to implement their own resources, methods for registering them with the current transaction. Because ArjunaCore supports sub-transactions, if a transaction is begun within the scope of an already executing transaction it will automatically be nested.

You can use ArjunaCore with multi-threaded applications. Each thread within an application can share a transaction or execute within its own transaction. Therefore, all ArjunaCore classes are also thread-safe.

Example 1.4. Relationships Between Activation, Termination, and Commitment

{

. . .

O1 objct1 = new objct1(Name-A);/* (i) bind to "old" persistent object A */

O2 objct2 = new objct2(); /* create a "new" persistent object */

OTS.current().begin(); /* (ii) start of atomic action */

objct1.op(...); /* (iii) object activation and invocations */

objct2.op(...);

. . .

OTS.current().commit(true); /* (iv) tx commits & objects deactivated */

} /* (v) */

- (i) Creation of bindings to persistent objects: This could involve the creation of stub objects and a call to remote objects. Here, we re-bind to an existing persistent object identified by Name-A, and a new persistent object. A naming system for remote objects maintains the mapping between object names and locations and is described in a later chapter.

- (ii) Start of the atomic transaction

- (iii) Operation invocations: As a part of a given invocation, the object implementation is responsible for ensuring that it is locked in read or write mode, assuming no lock conflict, and initialized, if necessary, with the latest committed state from the object store. The first time a lock is acquired on an object within a transaction the object’s state is acquired, if possible, from the object store.

- (iv) Commit of the top-level action: This includes updating of the state of any modified objects in the object store.

- (v) Breaking of the previously created bindings

The principal classes which make up the class hierarchy of ArjunaCore are depicted below.

- StateManager
  - LockManager
    - User-Defined Classes
  - Lock
    - User-Defined Classes
  - AbstractRecord
    - RecoveryRecord
    - LockRecord
    - RecordList
    - Other management record types
- AtomicAction
  - TopLevelTransaction
- Input/OutputObjectBuffer
  - Input/OutputObjectState
- ObjectStore

Programmers of fault-tolerant applications will be primarily concerned with the classes LockManager, Lock, and AtomicAction. Other classes important to a programmer are Uid and ObjectState.

Most ArjunaCore classes are derived from the base class StateManager, which provides primitive facilities necessary for managing persistent and recoverable objects. These facilities include support for the activation and de-activation of objects, and state-based object recovery.

The class LockManager uses the facilities of StateManager and Lock to provide the concurrency control required for implementing the serializability property of atomic actions. The concurrency control consists of two-phase locking in the current implementation.

The implementation of atomic action facilities is supported by AtomicAction and TopLevelTransaction.

Consider a simple example. Assume that Example is a user-defined persistent class suitably derived from the LockManager. An application containing an atomic transaction Trans accesses an object called O of type Example, by invoking the operation op1, which involves state changes to O. The serializability property requires that a write lock must be acquired on O before it is modified. Therefore, the body of op1 should contain a call to the setlock operation of the concurrency controller.

Example 1.5. Simple Concurrency Control

public boolean op1 (...)

{

if (setlock(new Lock(LockMode.WRITE)) == LockResult.GRANTED)

{

// actual state change operations follow

...

return true;

}

return false; // the lock was not granted

}

Procedure 1.1. Steps followed by the operation setlock

The operation setlock, provided by the LockManager class, performs the following functions in Example 1.5, “Simple Concurrency Control”.

- Check write lock compatibility with the currently held locks, and if allowed, continue.

- Call the StateManager operation activate. activate will load, if not done already, the latest persistent state of O from the object store, then call the StateManager operation modified, which has the effect of creating an instance of either RecoveryRecord or PersistenceRecord for O, depending upon whether O was persistent or not. The Lock is a WRITE lock so the old state of the object must be retained prior to modification. The record is then inserted into the RecordList of Trans.

- Create and insert a LockRecord instance in the RecordList of Trans.

Now suppose that action Trans is aborted sometime after the lock has been acquired. Then the rollback operation of AtomicAction will process the RecordList instance associated with Trans by invoking an appropriate Abort operation on the various records. The implementation of this operation by the LockRecord class will release the WRITE lock while that of RecoveryRecord or PersistenceRecord will restore the prior state of O.
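The abort processing described above can be sketched with a toy record list. This is an assumption-laden illustration rather than the ArjunaCore implementation: the UndoRecord interface, the Counter object and the Transaction class are hypothetical, the record classes only borrow the names used in the text, and processing the records in insertion order is a choice made for this sketch.

```java
import java.util.ArrayList;
import java.util.List;

public class RecordListDemo {
    // Hypothetical minimal record interface: each record undoes its own effect.
    interface UndoRecord {
        void abort();
    }

    // The object O being modified under the transaction.
    static class Counter {
        int value;
        boolean writeLocked;
    }

    // Remembers the state the object had before it was modified.
    static class RecoveryRecord implements UndoRecord {
        private final Counter target;
        private final int savedValue;

        RecoveryRecord(Counter target) {
            this.target = target;
            this.savedValue = target.value;
        }

        public void abort() { target.value = savedValue; }
    }

    // Releases the write lock taken on behalf of the transaction.
    static class LockRecord implements UndoRecord {
        private final Counter target;

        LockRecord(Counter target) { this.target = target; }

        public void abort() { target.writeLocked = false; }
    }

    // Stand-in for a transaction and its list of records.
    static class Transaction {
        private final List<UndoRecord> records = new ArrayList<>();

        void add(UndoRecord r) { records.add(r); }

        void rollback() {
            // Insertion order: state is restored before the lock is released.
            for (UndoRecord r : records) {
                r.abort();
            }
            records.clear();
        }
    }

    public static void main(String[] args) {
        Counter o = new Counter();
        o.value = 10;

        Transaction trans = new Transaction();
        // What setlock arranges on the caller's behalf for a WRITE lock:
        o.writeLocked = true;
        trans.add(new RecoveryRecord(o)); // old state captured before modification
        trans.add(new LockRecord(o));

        o.value = 99;     // the operation modifies the object
        trans.rollback(); // the transaction aborts

        System.out.println(o.value + " " + o.writeLocked); // prints "10 false"
    }
}
```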

It is important to realize that all of the above work is automatically being performed by ArjunaCore on behalf of the application programmer. The programmer need only start the transaction and set an appropriate lock; ArjunaCore and TXOJ take care of participant registration, persistence, concurrency control and recovery.

This section describes ArjunaCore and Transactional Objects for Java (TXOJ) in more detail, and shows how to use ArjunaCore to construct transactional applications.

Note: in previous releases ArjunaCore was often referred to as TxCore.

ArjunaCore needs to be able to remember the state of an object for several purposes, including recovery (the state represents some past state of the object), and for persistence (the state represents the final state of an object at application termination). Since these requirements share common functionality, they are all implemented using the same mechanism: the classes Input/OutputObjectState and Input/OutputBuffer.

Example 1.6. OutputBuffer and InputBuffer

public class OutputBuffer

{

public OutputBuffer ();

public final synchronized boolean valid ();

public synchronized byte[] buffer();

public synchronized int length ();

/* pack operations for standard Java types */

public synchronized void packByte (byte b) throws IOException;

public synchronized void packBytes (byte[] b) throws IOException;

public synchronized void packBoolean (boolean b) throws IOException;

public synchronized void packChar (char c) throws IOException;

public synchronized void packShort (short s) throws IOException;

public synchronized void packInt (int i) throws IOException;

public synchronized void packLong (long l) throws IOException;

public synchronized void packFloat (float f) throws IOException;

public synchronized void packDouble (double d) throws IOException;

public synchronized void packString (String s) throws IOException;

};

public class InputBuffer

{

public InputBuffer ();

public final synchronized boolean valid ();

public synchronized byte[] buffer();

public synchronized int length ();

/* unpack operations for standard Java types */

public synchronized byte unpackByte () throws IOException;

public synchronized byte[] unpackBytes () throws IOException;

public synchronized boolean unpackBoolean () throws IOException;

public synchronized char unpackChar () throws IOException;

public synchronized short unpackShort () throws IOException;

public synchronized int unpackInt () throws IOException;

public synchronized long unpackLong () throws IOException;

public synchronized float unpackFloat () throws IOException;

public synchronized double unpackDouble () throws IOException;

public synchronized String unpackString () throws IOException;

};

The InputBuffer and OutputBuffer classes maintain an internal array into which instances of the standard Java types can be contiguously packed or unpacked, using the pack or unpack operations. This buffer is automatically resized as required should it have insufficient space. The instances are all stored in the buffer in a standard form called network byte order to make them machine independent.
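Network byte order means most significant byte first (big-endian). As an illustration of the resulting layout only, and not of how OutputBuffer is actually implemented, the sketch below uses the standard java.io.DataOutputStream, which also writes multi-byte values big-endian. The class name ByteOrderDemo and its helper methods are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

public class ByteOrderDemo {
    // Pack an int and a long contiguously, most significant byte first.
    static byte[] pack(int i, long l) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeInt(i);
            out.writeLong(l);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Unpack the int that was packed first.
    static int unpackFirstInt(byte[] buffer) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(buffer))) {
            return in.readInt();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] buffer = pack(0x01020304, 5L);
        // The int occupies bytes 0..3 as 01 02 03 04 regardless of the host CPU.
        System.out.printf("%02x %02x %02x %02x%n", buffer[0], buffer[1], buffer[2], buffer[3]);
        System.out.println(buffer.length);          // 12: four bytes plus eight bytes
        System.out.println(unpackFirstInt(buffer)); // 16909060, i.e. 0x01020304
    }
}
```

Because the layout is fixed, a state packed on one machine can be unpacked on another with a different native byte order.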

Example 1.7. OutputObjectState and InputObjectState

class OutputObjectState extends OutputBuffer

{

public OutputObjectState (Uid newUid, String typeName);

public boolean notempty ();

public int size ();

public Uid stateUid ();

public String type ();

};

class InputObjectState extends InputBuffer

{

public InputObjectState (Uid newUid, String typeName, byte[] b);

public boolean notempty ();

public int size ();

public Uid stateUid ();

public String type ();

};

The InputObjectState and OutputObjectState classes provide all the functionality of InputBuffer and OutputBuffer, through inheritance, and add two additional instance variables that signify the Uid and type of the object for which the InputObjectState or OutputObjectState instance is a compressed image. These are used when accessing the object store during storage and retrieval of the object state.

The object store provided with ArjunaCore deliberately has a fairly restricted interface so that it can be implemented in a variety of ways. For example, object stores are implemented in shared memory, on the Unix file system (in several different forms), and as a remotely accessible store. More complete information about the object stores available in ArjunaCore can be found in the Appendix.

Note

As with all ArjunaCore classes, the default object stores are pure Java implementations. To access the shared memory and other more complex object store implementations, you need to use native methods.

All of the object stores hold and retrieve instances of the class InputObjectState or OutputObjectState. These instances are named by the Uid and Type of the object that they represent. States are read using the read_committed operation and written by the system using the write_uncommitted operation. Under normal operation new object states do not overwrite old object states but are written to the store as shadow copies. These shadows replace the original only when the commit_state operation is invoked. Normally all interaction with the object store is performed by ArjunaCore system components as appropriate, so the existence of any shadow versions of objects in the store is hidden from the programmer.
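The shadow-copy behaviour can be illustrated with a toy in-memory store. This is a sketch under stated assumptions, not one of the object stores shipped with ArjunaCore: ShadowStoreDemo is a hypothetical class, states are keyed by a plain String instead of a Uid and type name, the operation names are borrowed from the text, and remove_uncommitted is a helper added for this sketch to show what happens when a shadow is discarded.

```java
import java.util.HashMap;
import java.util.Map;

public class ShadowStoreDemo {
    private final Map<String, byte[]> committed = new HashMap<>();
    private final Map<String, byte[]> shadows = new HashMap<>();

    // New states never overwrite the committed state directly.
    boolean write_uncommitted(String uid, byte[] state) {
        shadows.put(uid, state.clone());
        return true;
    }

    // Promote the shadow copy, replacing the previous committed version.
    boolean commit_state(String uid) {
        byte[] shadow = shadows.remove(uid);
        if (shadow == null) {
            return false; // nothing to commit
        }
        committed.put(uid, shadow);
        return true;
    }

    // Helper for this sketch: discard a shadow, for example on abort.
    boolean remove_uncommitted(String uid) {
        return shadows.remove(uid) != null;
    }

    // Readers only ever see committed states; null if none exists.
    byte[] read_committed(String uid) {
        byte[] state = committed.get(uid);
        return state == null ? null : state.clone();
    }

    public static void main(String[] args) {
        ShadowStoreDemo store = new ShadowStoreDemo();
        store.write_uncommitted("obj-1", new byte[] {1});
        store.commit_state("obj-1");

        store.write_uncommitted("obj-1", new byte[] {2});
        System.out.println(store.read_committed("obj-1")[0]); // 1: shadow not yet visible

        store.commit_state("obj-1");
        System.out.println(store.read_committed("obj-1")[0]); // 2: shadow replaced the original
    }
}
```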

Example 1.8. StateStatus

public class StateStatus

{

public static final int OS_COMMITTED;

public static final int OS_UNCOMMITTED;

public static final int OS_COMMITTED_HIDDEN;

public static final int OS_UNCOMMITTED_HIDDEN;

public static final int OS_UNKNOWN;

}

Example 1.9. ObjectStore

public abstract class ObjectStore

{

/* The abstract interface */

public abstract boolean commit_state (Uid u, String name)

throws ObjectStoreException;

public abstract InputObjectState read_committed (Uid u, String name)

throws ObjectStoreException;

public abstract boolean write_uncommitted (Uid u, String name,

OutputObjectState os) throws ObjectStoreException;

. . .

};

When a transactional object is committing, it must make certain state changes persistent, so it can recover in the event of a failure and either continue to commit, or rollback. When using TXOJ, ArjunaCore will take care of this automatically. To guarantee ACID properties, these state changes must be flushed to the persistence store implementation before the transaction can proceed to commit. Otherwise, the application may assume that the transaction has committed when in fact the state changes may still reside within an operating system cache, and may be lost by a subsequent machine failure. By default, ArjunaCore ensures that such state changes are flushed. However, doing so can impose a significant performance penalty on the application.

To prevent transactional object state flushes, set the

ObjectStoreEnvironmentBean.objectStoreSync

variable to

OFF

.
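The cost that this setting trades away can be illustrated with plain Java NIO. The sketch below is not ArjunaCore code: the class SyncWriteSketch and its sync flag are hypothetical stand-ins that only show the difference between leaving data in the operating system cache and forcing it to the storage device.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Illustrative only: shows what "flushing" a state change means. */
class SyncWriteSketch {
    /**
     * Writes state to a file. When sync is true the data is forced to the
     * storage device before returning; when false it may still sit in an
     * operating system cache and could be lost by a machine failure.
     */
    static void writeState(Path file, byte[] state, boolean sync) {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE,
                StandardOpenOption.WRITE,
                StandardOpenOption.TRUNCATE_EXISTING)) {
            ByteBuffer buffer = ByteBuffer.wrap(state);
            while (buffer.hasRemaining()) {
                channel.write(buffer);
            }
            if (sync) {
                channel.force(true); // flush file content and metadata
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Reads the state back, for demonstration purposes. */
    static byte[] readState(Path file) {
        try {
            return Files.readAllBytes(file);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Creates a scratch file that is removed when the JVM exits. */
    static Path newTempFile() {
        try {
            Path file = Files.createTempFile("state", ".bin");
            file.toFile().deleteOnExit();
            return file;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Both calls produce the same file content in normal operation; the difference only shows up if the machine fails shortly after the write returns.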

ArjunaCore comes with support for several different object store implementations. The Appendix

describes these implementations, how to select and configure a given implementation on a per-object

basis using

the

ObjectStoreEnvironmentBean.objectStoreType

property variable, and indicates how

additional implementations can be provided.

The ArjunaCore class

StateManager

manages the state of an object and provides all of the

basic support mechanisms required by an object for state management

purposes.

StateManager

is responsible for creating and registering appropriate

resources concerned with the persistence and recovery of the transactional object. If a transaction is nested,

then

StateManager

will also propagate these resources between child transactions and

their parents at commit time.

Objects are assumed to be of three possible flavors.

Three Flavors of Objects

- Recoverable

-

StateManager attempts to generate and maintain appropriate recovery information for the object. Such objects have lifetimes that do not exceed the application program that creates them.

- Recoverable and Persistent

-

The lifetime of the object is assumed to be greater than that of the creating or accessing application, so that in addition to maintaining recovery information, StateManager attempts to automatically load or unload any existing persistent state for the object by calling the activate or deactivate operation at appropriate times.

- Neither Recoverable nor Persistent

-

No recovery information is ever kept, nor is object activation or deactivation ever automatically attempted.

This object property is selected at object construction time and cannot be changed thereafter. Thus an object cannot gain (or lose) recovery capabilities at some arbitrary point during its lifetime.

Example 1.10.

Object Store Implementation Using

StateManager

public class ObjectStatus

{

public static final int PASSIVE;

public static final int PASSIVE_NEW;

public static final int ACTIVE;

public static final int ACTIVE_NEW;

public static final int UNKNOWN_STATUS;

};

public class ObjectType

{

public static final int RECOVERABLE;

public static final int ANDPERSISTENT;

public static final int NEITHER;

};

public abstract class StateManager

{

public synchronized boolean activate ();

public synchronized boolean activate (String storeRoot);

public synchronized boolean deactivate ();

public synchronized boolean deactivate (String storeRoot, boolean commit);

public synchronized void destroy ();

public final Uid get_uid ();

public boolean restore_state (InputObjectState os, int ObjectType);

public boolean save_state (OutputObjectState os, int ObjectType);

public String type ();

. . .

protected StateManager ();

protected StateManager (int ObjectType, int objectModel);

protected StateManager (Uid uid);

protected StateManager (Uid uid, int objectModel);

. . .

protected final void modified ();

. . .

};

public class ObjectModel

{

public static final int SINGLE;

public static final int MULTIPLE;

};

If an object is recoverable or persistent,

StateManager

will invoke the operations

save_state

(while performing deactivation),

restore_state

(while performing activation), and

type

at various points during the execution of the

application. These operations must be implemented by the programmer since

StateManager

does not have access to a runtime description of the layout of an arbitrary Java object in memory

and thus

cannot implement a default policy for converting the in-memory version of the object to its passive

form. However, the capabilities provided by

InputObjectState

and

OutputObjectState

make the writing of these routines fairly simple. For example, the

save_state

implementation for a class

Example

that had member

variables called

A

,

B

, and

C

could simply be

Example 1.11, “

Example Implementation of Methods for

StateManager

”

.

Example 1.11.

Example Implementation of Methods for

StateManager

public boolean save_state ( OutputObjectState os, int ObjectType )

{

if (!super.save_state(os, ObjectType))

return false;

try

{

os.packInt(A);

os.packString(B);

os.packFloat(C);

return true;

}

catch (IOException e)

{

return false;

}

}

In order to support crash recovery for persistent objects, all

save_state

and

restore_state

methods of user objects must call

super.save_state

and

super.restore_state

.
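A restore_state implementation has to read the fields back in exactly the order in which save_state wrote them. The following self-contained sketch illustrates that symmetry using java.io data streams as stand-ins for OutputObjectState and InputObjectState; the class PackOrderSketch and its method names are assumptions made for the illustration, and the calls to the superclass are omitted because there is no StateManager parent here.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

/** Illustrative analogue of save_state/restore_state for members A, B and C. */
class PackOrderSketch {
    int a;
    String b;
    float c;

    /** Analogue of save_state: writes A, B, C in a fixed order. */
    boolean saveState(DataOutputStream os) {
        try {
            os.writeInt(a);
            os.writeUTF(b);
            os.writeFloat(c);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /** Analogue of restore_state: reads the fields back in the same order. */
    boolean restoreState(DataInputStream is) {
        try {
            a = is.readInt();
            b = is.readUTF();
            c = is.readFloat();
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /** Saves source and restores the bytes into a fresh instance. */
    static PackOrderSketch roundTrip(PackOrderSketch source) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        if (!source.saveState(new DataOutputStream(bytes))) {
            return null;
        }
        PackOrderSketch copy = new PackOrderSketch();
        DataInputStream in =
                new DataInputStream(new ByteArrayInputStream(bytes.toByteArray()));
        return copy.restoreState(in) ? copy : null;
    }
}
```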

Note

The

type

method is used to determine the location in the object store where the

state of instances of that class will be saved and ultimately restored. This location can actually be any

valid string. However, you should avoid using the hash character (#) as this is reserved for special

directories that ArjunaCore requires.

The

get_uid

operation of

StateManager

provides read-only

access to an object’s internal system name for whatever purpose the programmer requires, such as registration

of the name in a name server. The value of the internal system name can only be set when an object is

initially constructed, either by the provision of an explicit parameter or by generating a new identifier when

the object is created.

The

destroy

method can be used to remove the object’s state from the object

store. This is an atomic operation, and therefore will only remove the state if the top-level transaction

within which it is invoked eventually commits. The programmer must obtain exclusive access to the object prior

to invoking this operation.

Since object recovery and persistence essentially have complementary requirements (the only

difference being

where state information is stored and for what purpose),

StateManager

effectively

combines the management of these two properties into a single mechanism. It uses instances of the classes

InputObjectState

and

OutputObjectState

both for recovery and

persistence purposes. An additional argument passed to the

save_state

and

restore_state

operations allows the programmer to determine the purpose for which any

given invocation is being made. This allows different information to be saved for recovery and persistence

purposes.

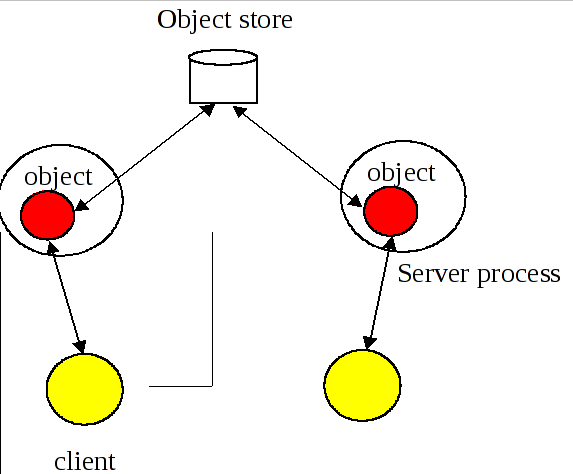

ArjunaCore supports two models for objects, which affect how an object's state and concurrency control are implemented.

ArjunaCore Object Models

- Single

-

Only a single copy of the object exists within the application. This copy resides within a single JVM, and all clients must address their invocations to this server. This model provides better performance, but represents a single point of failure, and in a multi-threaded environment may not protect the object from corruption if a single thread fails.

- Multiple

-

Logically, a single instance of the object exists, but copies of it are distributed across different JVMs. The performance of this model is worse than the SINGLE model, but it provides better failure isolation.

The default model is SINGLE. The programmer can override this on a per-object basis by using the appropriate constructor.

In summary, the ArjunaCore class

StateManager

manages the state of an object and provides

all of the basic support mechanisms required by an object for state management purposes. Some operations must

be defined by the class developer. These operations are:

save_state

,

restore_state

, and

type

.

- boolean save_state(OutputObjectState state, int objectType)

-

Invoked whenever the state of an object might need to be saved for future use, primarily for recovery or persistence purposes. The objectType parameter indicates the reason that save_state was invoked by ArjunaCore. This enables the programmer to save different pieces of information into the OutputObjectState supplied as the first parameter depending upon whether the state is needed for recovery or persistence purposes. For example, pointers to other ArjunaCore objects might be saved simply as pointers for recovery purposes but as Uids for persistence purposes. As shown earlier, the OutputObjectState class provides convenient operations to allow the saving of instances of all of the basic types in Java. In order to support crash recovery for persistent objects it is necessary for all save_state methods to call super.save_state. save_state assumes that an object is internally consistent and that all variables saved have valid values. It is the programmer's responsibility to ensure that this is the case.

- boolean restore_state(InputObjectState state, int objectType)

-

Invoked whenever the state of an object needs to be restored to the one supplied. Once again the second parameter allows different interpretations of the supplied state. In order to support crash recovery for persistent objects it is necessary for all restore_state methods to call super.restore_state.

- String type()

-

The ArjunaCore persistence mechanism requires a means of determining the type of an object as a string so that it can save or restore the state of the object into or from the object store. By convention this information indicates the position of the class in the hierarchy. For example, /StateManager/LockManager/Object.

The type method is used to determine the location in the object store where the state of instances of that class will be saved and ultimately restored. This can actually be any valid string. However, you should avoid using the hash character (#) as this is reserved for special directories that ArjunaCore requires.
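The idea of saving different information depending on the objectType argument can be sketched without any ArjunaCore classes. Everything below is hypothetical: the Purpose enum stands in for the objectType constants, Peer stands in for another transactional object, and a List stands in for OutputObjectState.

```java
import java.util.List;

/** Illustrative only: saves a link differently for recovery and persistence. */
class PurposeAwareSaveSketch {
    /** Stand-in for the objectType argument; not the ArjunaCore constants. */
    enum Purpose { RECOVERY, PERSISTENCE }

    /** A hypothetical peer object identified by a unique id string. */
    static final class Peer {
        final String uid;

        Peer(String uid) {
            this.uid = uid;
        }
    }

    private final Peer peer;

    PurposeAwareSaveSketch(Peer peer) {
        this.peer = peer;
    }

    /**
     * Appends the saved form of the peer link to out. Recovery state only
     * needs to live as long as the process, so the live reference suffices;
     * persistent state must outlive the process, so the identifier is stored.
     */
    boolean saveState(List<Object> out, Purpose purpose) {
        if (purpose == Purpose.RECOVERY) {
            out.add(peer);
        } else {
            out.add(peer.uid);
        }
        return true;
    }
}
```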

Consider the following basic

Array

class derived from the

StateManager

class. In this example, to illustrate saving and restoring of an object’s

state, the

highestIndex

variable is used to keep track of the highest element of the array

that has a non-zero value.

Example 1.12.

Array

Class

public class Array extends StateManager

{

public Array ();

public Array (Uid objUid);

public void finalize () { super.terminate(); super.finalize(); }

/* Class specific operations. */

public boolean set (int index, int value);

public int get (int index);

/* State management specific operations. */

public boolean save_state (OutputObjectState os, int ObjectType);

public boolean restore_state (InputObjectState os, int ObjectType);

public String type ();

public static final int ARRAY_SIZE = 10;

private int[] elements = new int[ARRAY_SIZE];

private int highestIndex;

};

The save_state, restore_state and type operations can be defined as follows:

/* Ignore ObjectType parameter for simplicity */

public boolean save_state (OutputObjectState os, int ObjectType)

{

if (!super.save_state(os, ObjectType))

return false;

try

{

os.packInt(highestIndex);

/*

* Traverse array state that we wish to save. Only save active elements

*/

for (int i = 0; i <= highestIndex; i++)

os.packInt(elements[i]);

return true;

}

catch (IOException e)

{

return false;

}

}

public boolean restore_state (InputObjectState os, int ObjectType)

{

if (!super.restore_state(os, ObjectType))

return false;

try

{

int i = 0;

highestIndex = os.unpackInt();

while (i < ARRAY_SIZE)

{

if (i <= highestIndex)

elements[i] = os.unpackInt();

else

elements[i] = 0;

i++;

}

return true;

}

catch (IOException e)

{

return false;

}

}

public String type ()

{

return "/StateManager/Array";

}

Concurrency control information within ArjunaCore is maintained by locks. Locks which are required to be shared between objects in different processes may be held within a lock store, similar to the object store facility presented previously. The lock store provided with ArjunaCore deliberately has a fairly restricted interface so that it can be implemented in a variety of ways. For example, lock stores are implemented in shared memory, on the Unix file system (in several different forms), and as a remotely accessible store. More information about the object stores available in ArjunaCore can be found in the Appendix.

Note

As with all ArjunaCore classes, the default lock stores are pure Java implementations. To access the shared memory and other more complex lock store implementations it is necessary to use native methods.

Example 1.13.

LockStore

public abstract class LockStore

{

public abstract InputObjectState read_state (Uid u, String tName)

throws LockStoreException;

public abstract boolean remove_state (Uid u, String tname);

public abstract boolean write_committed (Uid u, String tName,

OutputObjectState state);

};

ArjunaCore comes with support for several different lock store implementations. If the object model being

used is

SINGLE, then no lock store is required for maintaining locks, since the information about the object is not

exported from it. However, if the MULTIPLE model is used, then different run-time environments (processes, Java

virtual machines) may need to share concurrency control information. The implementation type of the lock store

to use can be specified for all objects within a given execution environment using the

TxojEnvironmentBean.lockStoreType

property variable. Currently this can have one of the

following values:

- BasicLockStore

-

This is an in-memory implementation which does not, by default, allow sharing of stored information between execution environments. The application programmer is responsible for sharing the store information.

- BasicPersistentLockStore

-

This is the default implementation, and stores locking information within the local file system. Therefore execution environments that share the same file store can share concurrency control information. The root of the file system into which locking information is written is the LockStore directory within the ArjunaCore installation directory. You can override this at runtime by setting the TxojEnvironmentBean.lockStoreDir property variable accordingly, or placing the location within the CLASSPATH.

java -DTxojEnvironmentBean.lockStoreDir=/var/tmp/LockStore myprogram

java -classpath $CLASSPATH:/var/tmp/LockStore myprogram

If neither of these approaches is taken, then the default location will be at the same level as the etc directory of the installation.

The concurrency controller is implemented by the class

LockManager

, which provides

sensible default behavior, while allowing the programmer to override it if deemed necessary by the particular

semantics of the class being programmed. The primary programmer interface to the concurrency controller is via

the

setlock

operation. By default, the ArjunaCore runtime system enforces strict two-phase

locking following a multiple reader, single writer policy on a per object basis. Lock acquisition is under

programmer control, since just as

StateManager

cannot determine if an operation modifies

an object,

LockManager

cannot determine if an operation requires a read or write

lock. Lock release, however, is normally under control of the system and requires no further intervention by the

programmer. This ensures that the two-phase property can be correctly maintained.

The

LockManager

class is primarily responsible for managing requests to set a lock on an

object or to release a lock as appropriate. However, since it is derived from

StateManager

, it can also control when some of the inherited facilities are invoked. For

example, if a request to set a write lock is granted, then

LockManager

invokes modified

directly assuming that the setting of a write lock implies that the invoking operation must be about to modify

the object. This may in turn cause recovery information to be saved if the object is recoverable. In a similar

fashion, successful lock acquisition causes activate to be invoked.

Therefore,

LockManager

is directly responsible for activating and deactivating persistent

objects, as well as registering

Resources

for managing concurrency control. By driving

the

StateManager

class, it is also responsible for registering

Resources

for persistent or recoverable state manipulation and object recovery. The

application programmer simply sets appropriate locks, starts and ends transactions, and extends the

save_state

and

restore_state

methods of

StateManager

.

Example 1.14.

LockResult

public class LockResult

{

public static final int GRANTED;

public static final int REFUSED;

public static final int RELEASED;

};

public class ConflictType

{

public static final int CONFLICT;

public static final int COMPATIBLE;

public static final int PRESENT;

};

public abstract class LockManager extends StateManager

{

public static final int defaultTimeout;

public static final int defaultRetry;

public static final int waitTotalTimeout;

public synchronized int setlock (Lock l);

public synchronized int setlock (Lock l, int retry);

public synchronized int setlock (Lock l, int retry, int sleepTime);

public synchronized boolean releaselock (Uid uid);

/* abstract methods inherited from StateManager */

public boolean restore_state (InputObjectState os, int ObjectType);

public boolean save_state (OutputObjectState os, int ObjectType);

public String type ();

protected LockManager ();

protected LockManager (int ObjectType, int objectModel);

protected LockManager (Uid storeUid);

protected LockManager (Uid storeUid, int ObjectType, int objectModel);

. . .

};

The

setlock

operation must be parametrized with the type of lock required (READ or

WRITE), and the number of retries to acquire the lock before giving up. If a lock conflict occurs, one of the

following scenarios will take place:

-

If the retry value is equal to LockManager.waitTotalTimeout, then the thread which called setlock will be blocked until the lock is released, or the total timeout specified has elapsed, in which case REFUSED will be returned.

-

If the lock cannot be obtained initially then LockManager will try for the specified number of retries, waiting for the specified timeout value between each failed attempt. The default is 100 attempts, each attempt being separated by a 0.25 second delay. The time between retries is specified in micro-seconds.

-

If a lock conflict occurs the current implementation simply times out lock requests, thereby preventing deadlocks, rather than providing a full deadlock detection scheme. If the requested lock is obtained, the setlock operation will return the value GRANTED, otherwise the value REFUSED is returned. It is the responsibility of the programmer to ensure that the remainder of the code for an operation is only executed if a lock request is granted. Below are examples of the use of the setlock operation.

Example 1.15.

setlock

Method Usage

res = setlock(new Lock(WRITE), 10); // Will attempt to set a

// write lock 11 times (10

// retries) on the object

// before giving up.

res = setlock(new Lock(READ), 0); // Will attempt to set a read

// lock 1 time (no retries) on

// the object before giving up.

res = setlock(new Lock(WRITE)); // Will attempt to set a write

// lock 101 times (default of

// 100 retries) on the object

// before giving up.
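The relationship between the retry count and the number of attempts in the comments above can be modelled with a short loop. This is a sketch under stated assumptions rather than the LockManager implementation: LockRetrySketch, its constant values, and the BooleanSupplier standing in for the conflict check are all illustrative, and the sleep interval here is in milliseconds simply because that is what Thread.sleep accepts.

```java
import java.util.function.BooleanSupplier;

/** Illustrative model of "retry + 1" lock acquisition attempts. */
class LockRetrySketch {
    static final int GRANTED = 0; // illustrative values, not ArjunaCore's
    static final int REFUSED = 1;

    /**
     * Tries to acquire a lock up to (retry + 1) times, sleeping between
     * failed attempts. tryAcquire stands in for the real conflict check.
     */
    static int setlock(BooleanSupplier tryAcquire, int retry, long sleepMillis) {
        for (int attempt = 0; attempt <= retry; attempt++) {
            if (tryAcquire.getAsBoolean()) {
                return GRANTED;
            }
            if (attempt < retry) {
                try {
                    Thread.sleep(sleepMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return REFUSED;
                }
            }
        }
        // Timing out instead of waiting forever is what prevents deadlock.
        return REFUSED;
    }
}
```

As with the real operation, the caller is expected to check the result and continue only when it is GRANTED.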

The concurrency control mechanism is integrated into the atomic action mechanism, thus ensuring that as

locks

are granted on an object appropriate information is registered with the currently running atomic action to

ensure that the locks are released at the correct time. This frees the programmer from the burden of explicitly

freeing any acquired locks if they were acquired within atomic actions. However, if locks are acquired on an

object outside of the scope of an atomic action, it is the programmer's responsibility to release the locks when

required, using the corresponding

releaselock

operation.

Unlike many other systems, locks in ArjunaCore are not special system types. Instead they are simply

instances of

other ArjunaCore objects (the class

Lock

which is also derived from

StateManager

so that locks may be made persistent if required and can also be named in a

simple fashion). Furthermore,

LockManager

deliberately has no knowledge of the semantics

of the actual policy by which lock requests are granted. Such information is maintained by the actual

Lock

class instances which provide operations (the

conflictsWith

operation) by which

LockManager

can determine if two locks conflict or not. This

separation is important in that it allows the programmer to derive new lock types from the basic

Lock

class and by providing appropriate definitions of the conflict operations enhanced

levels of concurrency may be possible.

Example 1.16.

LockMode

Class

public class LockMode

{

public static final int READ;

public static final int WRITE;

};

public class LockStatus

{

public static final int LOCKFREE;

public static final int LOCKHELD;

public static final int LOCKRETAINED;

};

public class Lock extends StateManager

{

public Lock (int lockMode);

public boolean conflictsWith (Lock otherLock);

public boolean modifiesObject ();

public boolean restore_state (InputObjectState os, int ObjectType);

public boolean save_state (OutputObjectState os, int ObjectType);

public String type ();

. . .

};

The

Lock

class provides a

modifiesObject

operation which

LockManager

uses to determine if granting this locking request requires a call on

modified. This operation is provided so that locking modes other than simple read and write can be

supported. The supplied

Lock

class supports the traditional multiple reader/single writer

policy.
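To make the role of conflictsWith and modifiesObject more concrete, here is a self-contained sketch of a multiple reader, single writer policy and of a derived lock type that relaxes it. The classes SimpleLock and CommutingWriteLock are hypothetical stand-ins for Lock and a user-defined subclass; the sketch ignores lock ownership and persistence, which a real lock type would need to consider.

```java
/** Illustrative conflict rules; these are not the ArjunaCore Lock classes. */
class LockConflictSketch {
    enum Mode { READ, WRITE }

    /** Stand-in for the basic lock: multiple readers, single writer. */
    static class SimpleLock {
        final Mode mode;

        SimpleLock(Mode mode) {
            this.mode = mode;
        }

        /** Two locks conflict unless both are read locks. */
        boolean conflictsWith(SimpleLock other) {
            return mode == Mode.WRITE || other.mode == Mode.WRITE;
        }

        /** Only a write lock implies the object is about to be modified. */
        boolean modifiesObject() {
            return mode == Mode.WRITE;
        }
    }

    /**
     * Hypothetical derived lock type: writes that commute with one another
     * (for example, increments of a counter) are allowed to proceed together.
     */
    static class CommutingWriteLock extends SimpleLock {
        CommutingWriteLock() {
            super(Mode.WRITE);
        }

        @Override
        boolean conflictsWith(SimpleLock other) {
            if (other instanceof CommutingWriteLock) {
                return false;
            }
            return super.conflictsWith(other);
        }
    }
}
```

Because the manager only asks the locks whether they conflict, a new policy such as this one needs no change to the manager itself.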

Recall that ArjunaCore objects can be recoverable, recoverable and persistent, or neither. Additionally each

object

possesses a unique internal name. These attributes can only be set when that object is constructed. Thus

LockManager

provides four protected constructors for use by derived classes, each of which

fulfills a distinct purpose.

Protected Constructors Provided by

LockManager

- LockManager()

-

This constructor allows the creation of new objects, having no prior state.

- LockManager(int objectType, int objectModel)

-

As above, this constructor allows the creation of new objects having no prior state. The objectType parameter determines whether an object is simply recoverable (indicated by RECOVERABLE), recoverable and persistent (indicated by ANDPERSISTENT), or neither (indicated by NEITHER). If an object is marked as being persistent then the state of the object will be stored in one of the object stores. The objectModel parameter only has meaning if the object is RECOVERABLE. If the object model is SINGLE (the default behavior) then the recoverable state of the object is maintained within the object itself, and has no external representation. Otherwise an in-memory (volatile) object store is used to store the state of the object between atomic actions.

Constructors for new persistent objects should make use of atomic actions within themselves. This will ensure that the state of the object is automatically written to the object store either when the action in the constructor commits or, if an enclosing action exists, when the appropriate top-level action commits. Later examples in this chapter illustrate this point further.

- LockManager(Uid objUid)

-

This constructor allows access to an existing persistent object, whose internal name is given by the objUid parameter. Objects constructed using this operation will normally have their prior state (identified by objUid) loaded from an object store automatically by the system.

- LockManager(Uid objUid, int objectModel)

-

As above, this constructor allows access to an existing persistent object, whose internal name is given by the objUid parameter. Objects constructed using this operation will normally have their prior state (identified by objUid) loaded from an object store automatically by the system. If the object model is SINGLE (the default behavior), then the object will not be reactivated at the start of each top-level transaction.

The finalizer of a programmer-defined class must invoke the inherited operation

terminate

to inform the state management mechanism that the object is about to be

destroyed. Otherwise, unpredictable results may occur.

Atomic actions (transactions) can be used by both application programmers and class developers. Thus entire operations (or parts of operations) can be made atomic as required by the semantics of a particular operation. This chapter will describe some of the more subtle issues involved with using transactions in general and ArjunaCore in particular.

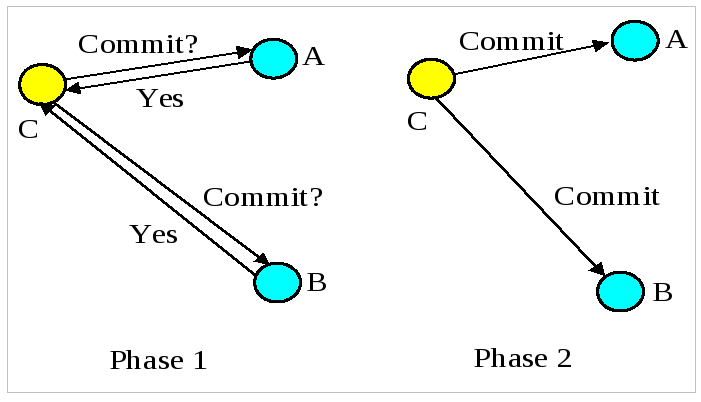

In some cases it may be necessary to enlist participants that are not two-phase commit aware into a two-phase commit transaction. If there is only a single resource then there is no need for two-phase commit. However, if there are multiple resources in the transaction, the Last Resource Commit Optimization (LRCO) comes into play. It is possible for a single resource that is one-phase aware (i.e., can only commit or roll back, with no prepare) to be enlisted in a transaction with two-phase commit aware resources. This feature is implemented by logging the decision to commit after committing the one-phase aware participant: the coordinator asks each two-phase aware participant if it is able to prepare, and if they all vote yes then the one-phase aware participant is asked to commit. If the one-phase aware participant commits successfully then the decision to commit is logged and then commit is called on each two-phase aware participant. A heuristic outcome will occur if the coordinator fails before logging its commit decision but after the one-phase participant has committed, since each two-phase aware participant will eventually roll back (as required under presumed abort semantics). This strategy delays the logging of the decision to commit so that in failure scenarios a write operation is avoided. But this choice does mean that there is no record in the system of the fact that a heuristic outcome has occurred.

In order to utilize the LRCO, your participant must implement the

com.arjuna.ats.arjuna.coordinator.OnePhase

interface and be registered with the

transaction through the

BasicAction.add

operation. Since this operation expects instances

of

AbstractRecord

, you must create an instance of

com.arjuna.ats.arjuna.LastResourceRecord

and give your participant as the constructor

parameter.

Example 1.17.

Class

com.arjuna.ats.arjuna.LastResourceRecord

try

{

AtomicAction A = new AtomicAction();

OnePhase opRes = new MyOnePhaseResource(); // your own class implementing the OnePhase interface (the name is illustrative)

System.out.println("Starting top-level action.");

A.begin();

A.add(new LastResourceRecord(opRes));

// add any other (two-phase aware) participants here

A.commit();

}

catch (Exception e)

{

e.printStackTrace();

}
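The ordering of steps that the LRCO relies on (prepare the two-phase participants, commit the one-phase participant, log the decision, then commit the rest) can be traced with a small simulation. This is a sketch and not the coordinator's implementation: the interfaces TwoPhase and OnePhaseOnly, the helper factories, and the trace strings are all assumptions made for the illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative simulation of the LRCO step ordering. */
class LrcoSketch {
    /** A two-phase aware participant (hypothetical interface). */
    interface TwoPhase {
        boolean prepare();
        void commit();
        void rollback();
    }

    /** A one-phase aware participant: it can only commit or roll back. */
    interface OnePhaseOnly {
        boolean commit();
        void rollback();
    }

    /** Completes the transaction and returns the order of steps taken. */
    static List<String> complete(List<TwoPhase> participants, OnePhaseOnly last) {
        List<String> trace = new ArrayList<>();
        boolean allPrepared = true;
        for (TwoPhase p : participants) {
            trace.add("prepare");
            if (!p.prepare()) {
                allPrepared = false;
                break;
            }
        }
        if (allPrepared) {
            // The one-phase participant commits before the decision is logged.
            trace.add("commit-one-phase");
            if (last.commit()) {
                trace.add("log-decision");
                for (TwoPhase p : participants) {
                    trace.add("commit");
                    p.commit();
                }
                return trace;
            }
        } else {
            trace.add("rollback-one-phase");
            last.rollback();
        }
        for (TwoPhase p : participants) {
            trace.add("rollback");
            p.rollback();
        }
        return trace;
    }

    /** Helper: a two-phase participant that votes as instructed. */
    static TwoPhase voting(boolean vote) {
        return new TwoPhase() {
            public boolean prepare() { return vote; }
            public void commit() { }
            public void rollback() { }
        };
    }

    /** Helper: a one-phase participant whose commit succeeds or fails. */
    static OnePhaseOnly committing(boolean succeeds) {
        return new OnePhaseOnly() {
            public boolean commit() { return succeeds; }
            public void rollback() { }
        };
    }
}
```

A crash between the commit-one-phase and log-decision steps is the window in which the heuristic outcome described above can arise.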

In some situations the application thread may not want to be informed of heuristics during completion. However, it is possible some other component in the application, thread or admin may still want to be informed. Therefore, special participants can be registered with the transaction which are triggered during the Synchronization phase and given the true outcome of the transaction. We do not dictate a specific implementation for what these participants do with the information (e.g., OTS allows for the CORBA Notification Service to be used).

To use this capability, create classes derived from the HeuristicNotification class and define the heuristicOutcome method to use whatever mechanism makes sense for your application. Instances of this class should be registered with the transaction as Synchronizations.

There are no special constructs for nesting of transactions. If an action is begun while another action is running then it is automatically nested. This allows for a modular structure to applications, whereby objects can be implemented using atomic actions within their operations without the application programmer having to worry about the applications which use them, and whether or not the applications will use atomic actions as well. Thus, in some applications actions may be top-level, whereas in others they may be nested. Objects written in this way can then be shared between application programmers, and ArjunaCore will guarantee their consistency.

If a nested action is aborted, all of its work will be undone, although strict two-phase locking means that any locks it may have obtained will be retained until the top-level action commits or aborts. If a nested action commits then the work it has performed will only be committed by the system if the top-level action commits. If the top-level action aborts then all of the work will be undone.

The committing or aborting of a nested action does not automatically affect the outcome of the action within which it is nested. This is application dependent, and allows a programmer to structure atomic actions to contain faults, undo work, etc.
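The commit and abort rules for nested actions can be modelled with a stack of working copies. The class NestedActionSketch below is purely illustrative and assumes a single thread with an action always active when put is called; it does not model locking, and it is not how ArjunaCore tracks nested actions.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

/** Illustrative model of nested commit and abort semantics. */
class NestedActionSketch {
    private final Map<String, Integer> committed = new HashMap<>();
    // One working copy per active action; the head is the innermost action.
    private final Deque<Map<String, Integer>> active = new ArrayDeque<>();

    /** Begins an action; it is nested automatically if another is active. */
    void begin() {
        Map<String, Integer> parentView = active.isEmpty() ? committed : active.peek();
        active.push(new HashMap<>(parentView));
    }

    /** Records a change in the innermost active action. */
    void put(String key, int value) {
        active.peek().put(key, value);
    }

    /** Commits the innermost action into its parent, or makes it permanent. */
    void commit() {
        Map<String, Integer> work = active.pop();
        Map<String, Integer> target = active.isEmpty() ? committed : active.peek();
        target.clear();
        target.putAll(work);
    }

    /** Aborts the innermost action, discarding its work and its children's. */
    void abort() {
        active.pop();
    }

    /** Returns the permanently committed value, or null if there is none. */
    Integer committedValue(String key) {
        return committed.get(key);
    }
}
```

Work committed by a nested action becomes permanent only when the outermost commit runs, and an abort at any level discards everything folded into that level so far.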

By default, the Transaction Service executes the

commit

protocol of a top-level

transaction in a synchronous manner. All registered resources will be told to prepare in order by a single thread,

and then they will be told to commit or rollback. A similar comment

applies to the volatile phase of the protocol which provides a

synchronization mechanism that allows an interested party to be notified

before and after the transaction completes. This has several possible

disadvantages:

-

In the case of many registered synchronizations, the beforeSynchronization operation can logically be invoked in parallel on each non-interposed synchronization (and similarly for the interposed synchronizations). The disadvantage is that if an “early” synchronization in the list of registered resources forces a rollback by throwing an unchecked exception, possibly many beforeCompletion operations will have been made needlessly.

-

In the case of many registered resources, the prepare operation can logically be invoked in parallel on each resource. The disadvantage is that if an “early” resource in the list of registered resources forces a rollback during prepare, possibly many prepare operations will have been made needlessly.

-

In the case where heuristic reporting is not required by the application, the second phase of the commit protocol (including any afterCompletion synchronizations) can be done asynchronously, since its success or failure is not important to the outcome of the transaction.

Therefore, Narayana

provides runtime options to enable possible threading optimizations. By setting the

CoordinatorEnvironmentBean.asyncBeforeSynchronization

environment variable to

YES

, during the

beforeSynchronization

phase a separate thread

will be created for each synchronization registered with the transaction.

By setting the

CoordinatorEnvironmentBean.asyncPrepare

environment variable to

YES

, during the

prepare

phase a separate thread will be created for

each registered participant within the transaction. By setting

CoordinatorEnvironmentBean.asyncCommit

to

YES

, a separate thread will be

created to complete the second phase of the transaction provided knowledge about heuristics outcomes is not required.

By setting the

CoordinatorEnvironmentBean.asyncAfterSynchronization

environment variable to

YES

, during the

afterSynchronization

phase a separate thread

will be created for each synchronization registered with the transaction

provided knowledge about heuristics outcomes is not required.
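What "a separate thread for each registered participant" means during prepare can be sketched with the standard java.util.concurrent classes. This is an illustration under stated assumptions, not the coordinator's code: AsyncPrepareSketch is a hypothetical class, each participant is modelled as a Callable that returns its vote, and a participant that throws is treated as voting no.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Illustrative parallel prepare phase; not the Narayana coordinator. */
class AsyncPrepareSketch {
    /**
     * Runs every participant's prepare on its own thread and returns true
     * only if all of them vote yes.
     */
    static boolean prepareAll(List<Callable<Boolean>> participants) {
        if (participants.isEmpty()) {
            return true;
        }
        ExecutorService pool = Executors.newFixedThreadPool(participants.size());
        try {
            // invokeAll waits until every participant has finished preparing.
            List<Future<Boolean>> votes = pool.invokeAll(participants);
            boolean allYes = true;
            for (Future<Boolean> vote : votes) {
                try {
                    allYes &= vote.get();
                } catch (ExecutionException e) {
                    allYes = false; // a participant that throws counts as "no"
                }
            }
            return allYes;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            pool.shutdown();
        }
    }
}
```

Note that every participant is asked to prepare even if one of them votes no early on, which is the trade-off mentioned in the list of disadvantages above.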

In addition to normal top-level and nested atomic actions, ArjunaCore also supports independent top-level actions, which can be used to relax strict serializability in a controlled manner. An independent top-level action can be executed from anywhere within another atomic action and behaves exactly like a normal top-level action. Its results are made permanent when it commits and will not be undone if any of the actions within which it was originally nested abort.

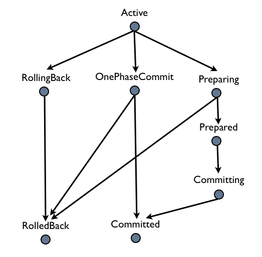

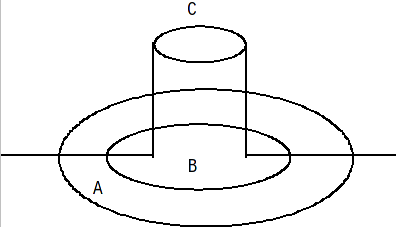

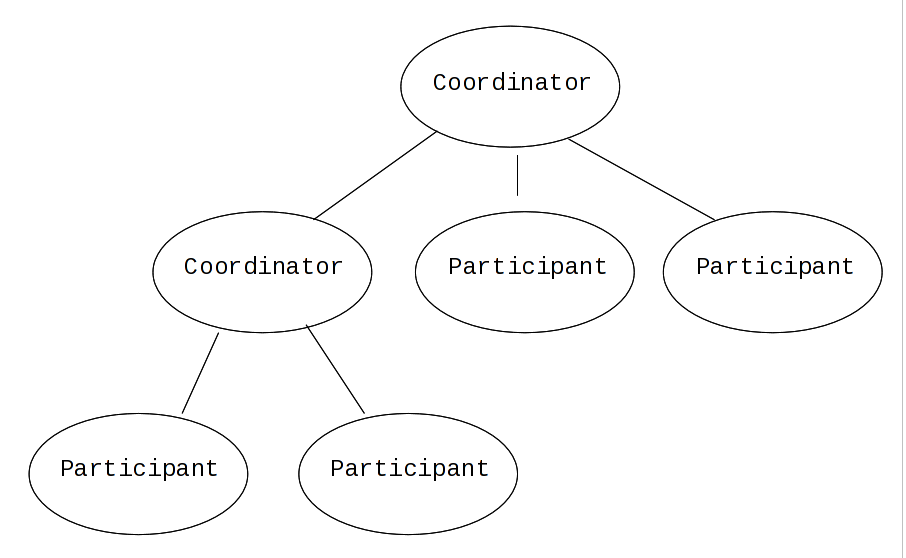

Figure 1.5. Independent Top-Level Action

Figure 1.5, “Independent Top-Level Action” shows a typical nesting of atomic actions, where action B is nested within action A. Although atomic action C is logically nested within action B (it had its Begin operation invoked while B was active), it is an independent top-level action, so it will commit or abort independently of the other actions within the structure. Because of the nature of independent top-level actions, they should be used with caution and only in situations where their use has been carefully examined.

Top-level actions can be used within an application by declaring and using instances of the class TopLevelTransaction. They are used in exactly the same way as other transactions.
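The behaviour described above can be illustrated with a small self-contained model. This is a toy sketch, not ArjunaCore code: the Action class, its write buffer and the in-memory store are all hypothetical stand-ins, chosen only to show that a nested action's effects depend on its parents while an independent top-level action's effects do not.

```java
import java.util.HashMap;
import java.util.Map;

public class IndependentActionSketch {
    /** Toy "permanent" store: only top-level commits reach it. */
    static final Map<String, Integer> store = new HashMap<>();

    /** Toy action that buffers its writes; NOT ArjunaCore's AtomicAction. */
    static class Action {
        final Action parent;                       // null means top-level
        final Map<String, Integer> writes = new HashMap<>();

        Action(Action parent) { this.parent = parent; }

        void write(String key, int value) { writes.put(key, value); }

        void commit() {
            if (parent == null) {
                store.putAll(writes);              // top-level: results become permanent
            } else {
                parent.writes.putAll(writes);      // nested: fate now tied to the parent
            }
        }

        void abort() { writes.clear(); }           // discard everything buffered here
    }

    static Map<String, Integer> run() {
        store.clear();
        Action a = new Action(null);               // top-level action A
        Action b = new Action(a);                  // B nested within A
        Action c = new Action(null);               // C begun while B is active, but independent
        b.write("fromB", 1);
        c.write("fromC", 2);
        c.commit();                                // permanent straight away
        b.commit();                                // merged into A only
        a.abort();                                 // undoes A and B, but not C
        return new HashMap<>(store);
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

In this model only the write made by C survives the abort of A, which is the relaxation of strict serializability that the text warns should be used with care.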

Exercise caution when writing the save_state and restore_state operations to ensure that no atomic actions are started, either explicitly in the operation or implicitly through use of some other operation. This restriction arises because ArjunaCore may invoke restore_state as part of its commit processing, resulting in an attempt to execute an atomic action during the commit or abort phase of another action. This might violate the atomicity properties of the action being committed or aborted and is thus discouraged.

Example 1.18.

Consider Example 1.12, “Array Class”, given previously. The set and get operations could be implemented as shown below.

This is a simplification of the code, ignoring error conditions and exceptions.

    public boolean set (int index, int value)
    {
        boolean result = false;
        AtomicAction A = new AtomicAction();

        A.begin();

        // We need to set a WRITE lock as we want to modify the state.
        if (setlock(new Lock(LockMode.WRITE), 0) == LockResult.GRANTED)
        {
            elements[index] = value;

            // Track the highest index that holds a positive value.
            if ((value > 0) && (index > highestIndex))
                highestIndex = index;

            A.commit(true);
            result = true;
        }
        else
            A.rollback();

        return result;
    }

    public int get (int index) // assume -1 means error
    {
        AtomicAction A = new AtomicAction();

        A.begin();

        // We only need a READ lock as the state is unchanged.
        if (setlock(new Lock(LockMode.READ), 0) == LockResult.GRANTED)
        {
            // Read the value while the lock is still held, then commit.
            int value = elements[index];

            A.commit(true);
            return value;
        }
        else
            A.rollback();

        return -1;
    }

Java objects are deleted when the garbage collector determines that they are no longer required. Deleting an object that is currently under the control of a transaction must be approached with caution since if the object is being manipulated within a transaction its fate is effectively determined by the transaction. Therefore, regardless of the references to a transactional object maintained by an application, ArjunaCore will always retain its own references to ensure that the object is not garbage collected until after any transaction has terminated.

By default, transactions live until they are terminated by the application that created them or a failure occurs. However, it is possible to set a timeout (in seconds) on a per-transaction basis such that if the transaction has not terminated before the timeout expires it will be automatically rolled back.

In ArjunaCore, the timeout value is provided as a parameter to the AtomicAction constructor. If a value of AtomicAction.NO_TIMEOUT is provided (the default) then the transaction will not be automatically timed out. Any other positive value is assumed to be the timeout for the transaction (in seconds). A value of zero is taken to be a global default timeout, which can be provided by the property CoordinatorEnvironmentBean.defaultTimeout, which has a default value of 60 seconds.

Note

Default timeout values for other Narayana components, such as JTS, may be different and you should consult the relevant documentation to be sure.

When a top-level transaction is created with a non-zero timeout, it is subject to being rolled back if it has not completed within the specified number of seconds. Narayana uses a separate reaper thread which monitors all locally created transactions, and forces them to roll back if their timeouts elapse. If the transaction cannot be rolled back at that point, the reaper will force it into a rollback-only state so that it will eventually be rolled back.

By default this thread is dynamically scheduled to awake according to the timeout values for any transactions created, ensuring the most timely termination of transactions. It may alternatively be configured to awake at a fixed interval, which can reduce overhead at the cost of less accurate rollback timing. For periodic operation, change the CoordinatorEnvironmentBean.txReaperMode property from its default value of DYNAMIC to PERIODIC and set the interval between runs, in milliseconds, using the property CoordinatorEnvironmentBean.txReaperTimeout. The default interval in PERIODIC mode is 120000 milliseconds.
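The cost in rollback accuracy can be made concrete with a little arithmetic. The sketch below is a simplified model rather than the reaper's actual scheduling code: it assumes that in PERIODIC mode the reaper's wake-ups fall at exact multiples of the interval counted from the start of the transaction, and the class and method names are hypothetical.

```java
public class ReaperTimingSketch {
    /** DYNAMIC mode: the reaper is scheduled to wake when the timeout elapses. */
    static long dynamicWakeMillis(long timeoutMillis) {
        return timeoutMillis;
    }

    /**
     * PERIODIC mode: an expired transaction is only noticed on the next fixed wake-up.
     * Assumption for this sketch: wake-ups occur at multiples of the interval,
     * measured from the moment the transaction starts.
     */
    static long periodicWakeMillis(long timeoutMillis, long intervalMillis) {
        long wakeUps = (timeoutMillis + intervalMillis - 1) / intervalMillis; // round up
        return wakeUps * intervalMillis;
    }

    public static void main(String[] args) {
        long timeout = 60_000;    // a 60 second transaction timeout
        long interval = 120_000;  // the default PERIODIC interval quoted above
        System.out.println("DYNAMIC rollback after ms:  " + dynamicWakeMillis(timeout));
        System.out.println("PERIODIC rollback after ms: " + periodicWakeMillis(timeout, interval));
    }
}
```

Under these assumptions a transaction with a 60 second timeout is rolled back after 60000 milliseconds in DYNAMIC mode but not until 120000 milliseconds in PERIODIC mode with the default interval. In practice the wake-ups are not aligned with the start of a transaction, so the actual delay varies.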

Warning

In earlier versions the PERIODIC mode was known as NORMAL and was the default behavior. The use of the configuration value NORMAL is deprecated and PERIODIC should be used instead if the old scheduling behavior is still required.

If a value of 0 is specified for the timeout of a top-level transaction, or no timeout is specified, then Narayana will not impose any timeout on the transaction, and the transaction will be allowed to run indefinitely. This default timeout can be overridden by setting the CoordinatorEnvironmentBean.defaultTimeout property to the required timeout value in seconds when using ArjunaCore, ArjunaJTA or ArjunaJTS.

Note

As of JBoss Transaction Service 4.5, transaction timeouts have been unified across all transaction components and are controlled by ArjunaCore.

If you want to be informed when a transaction is rolled back or forced into a rollback-only mode by the reaper, you can create a class that inherits from class com.arjuna.ats.arjuna.coordinator.listener.ReaperMonitor and overrides the rolledBack and markedRollbackOnly methods. When registered with the reaper via the TransactionReaper.addListener method, the reaper will invoke one of these methods depending upon how it tries to terminate the transaction.

Note

The reaper will not inform you if the transaction is terminated (committed or rolled back) outside of its control, such as by the application.
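The notification rules above can be sketched with a self-contained model. Nothing here is the Narayana API: Monitor and ToyReaper are hypothetical stand-ins for ReaperMonitor and TransactionReaper, transaction identifiers are plain strings, and time is passed in explicitly so that the behaviour is deterministic.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ReaperListenerSketch {
    /** Hypothetical stand-in for the listener type described above. */
    interface Monitor {
        void rolledBack(String txId);
        void markedRollbackOnly(String txId);
    }

    /** Toy reaper that tracks deadlines and notifies listeners. */
    static class ToyReaper {
        private static class Entry {
            final long deadlineMillis;
            final boolean canRollBackNow;
            Entry(long deadlineMillis, boolean canRollBackNow) {
                this.deadlineMillis = deadlineMillis;
                this.canRollBackNow = canRollBackNow;
            }
        }

        private final Map<String, Entry> watched = new LinkedHashMap<>();
        private final List<Monitor> listeners = new ArrayList<>();

        void addListener(Monitor m) { listeners.add(m); }

        void register(String txId, long deadlineMillis, boolean canRollBackNow) {
            watched.put(txId, new Entry(deadlineMillis, canRollBackNow));
        }

        /** The application ended the transaction itself: no listener is called. */
        void terminatedByApplication(String txId) { watched.remove(txId); }

        /** Handles every transaction whose deadline has passed at time nowMillis. */
        void check(long nowMillis) {
            Iterator<Map.Entry<String, Entry>> it = watched.entrySet().iterator();
            while (it.hasNext()) {
                Map.Entry<String, Entry> e = it.next();
                if (e.getValue().deadlineMillis > nowMillis) continue;
                for (Monitor m : listeners) {
                    if (e.getValue().canRollBackNow) m.rolledBack(e.getKey());
                    else m.markedRollbackOnly(e.getKey());
                }
                it.remove();
            }
        }
    }

    static List<String> demo() {
        List<String> events = new ArrayList<>();
        ToyReaper reaper = new ToyReaper();
        reaper.addListener(new Monitor() {
            public void rolledBack(String txId) { events.add("rolledBack:" + txId); }
            public void markedRollbackOnly(String txId) { events.add("markedRollbackOnly:" + txId); }
        });
        reaper.register("tx1", 1000, true);   // can be rolled back directly
        reaper.register("tx2", 1000, false);  // can only be marked rollback-only
        reaper.register("tx3", 1000, true);
        reaper.terminatedByApplication("tx3"); // ended outside the reaper's control
        reaper.check(500);                     // nothing has expired yet
        reaper.check(1500);                    // tx1 and tx2 have expired
        return events;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

In this model the listener hears about tx1 and tx2 but never about tx3, mirroring the note that the reaper does not report transactions terminated outside its control.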

Examples throughout this manual use transactions in the implementation of constructors for new persistent objects. This is deliberate because it guarantees correct propagation of the state of the object to the object store. The state of a modified persistent object is only written to the object store when the top-level transaction commits. Thus, if the constructor transaction is top-level and it commits, the newly-created object is written to the store and becomes available immediately. If, however, the constructor transaction commits but is nested because another transaction that was started prior to object creation is running, the state is written only if all of the parent transactions commit.

On the other hand, if the constructor does not use transactions, inconsistencies in the system can arise. For example, if no transaction is active when the object is created, its state is not saved to the store until the next time the object is modified under the control of some transaction.

Example 1.19. Nested Transactions In Constructors

    AtomicAction A = new AtomicAction();
    Object obj1;
    Object obj2;

    obj1 = new Object(); // create new object
    obj2 = new Object("old"); // existing object

    A.begin(0);
    obj2.remember(obj1.get_uid()); // obj2 now contains reference to obj1
    A.commit(true); // obj2 saved but obj1 is not

The two objects are created outside of the control of the top-level action A. obj1 is a new object. obj2 is an old existing object. When the remember operation of obj2 is invoked, the object will be activated and the Uid of obj1 remembered. Since this action commits, the persistent state of obj2 may now contain the Uid of obj1. However, the state of obj1

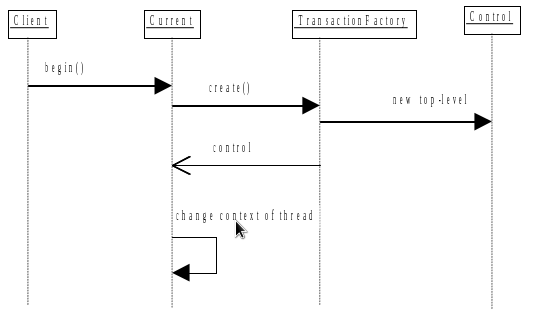

itself